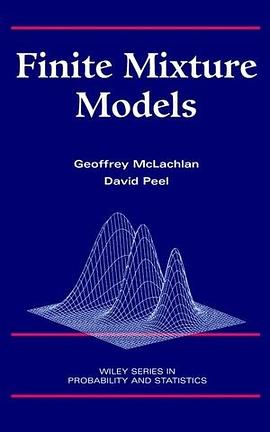

Finite Mixture Models

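- Mathematics

- Gaussian mixture models

- Algorithms

- Optimization

- Text-as-Data

- Statistical modeling

- Mixture models

- Cluster analysis

- Machine learning

- Probabilistic models

- EM algorithm

- Bayesian methods

- Data analysis

- Pattern recognition

- Statistical inference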

Description

An up-to-date, comprehensive account of major issues in finite mixture modeling

This volume provides an up-to-date account of the theory and applications of modeling via finite mixture distributions. With an emphasis on the applications of mixture models in both mainstream analysis and other areas such as unsupervised pattern recognition, speech recognition, and medical imaging, the book describes the formulations of the finite mixture approach, details its methodology, discusses aspects of its implementation, and illustrates its application in many common statistical contexts.

Major issues discussed in this book include identifiability problems, actual fitting of finite mixtures through use of the EM algorithm, properties of the maximum likelihood estimators so obtained, assessment of the number of components to be used in the mixture, and the applicability of asymptotic theory in providing a basis for the solutions to some of these problems. The author also considers how the EM algorithm can be scaled to handle the fitting of mixture models to very large databases, as in data mining applications. This comprehensive, practical guide:

* Provides more than 800 references, 40% published since 1995

* Includes an appendix listing available mixture software

* Links statistical literature with machine learning and pattern recognition literature

* Contains more than 100 helpful graphs, charts, and tables

Finite Mixture Models is an important resource for both applied and theoretical statisticians as well as for researchers in the many areas in which finite mixture models can be used to analyze data.

Table of Contents

General Introduction.

ML Fitting of Mixture Models.

Multivariate Normal Mixtures.

Bayesian Approach to Mixture Analysis.

Mixtures with Nonnormal Components.

Assessing the Number of Components in Mixture Models.

Multivariate t Mixtures.

Mixtures of Factor Analyzers.

Fitting Mixture Models to Binned Data.

Mixture Models for Failure-Time Data.

Mixture Analysis of Directional Data.

Variants of the EM Algorithm for Large Databases.

Hidden Markov Models.

Appendices.

References.

Indexes.

About the Authors

GEOFFREY McLACHLAN, PhD, DSc, is Professor in the Department of Mathematics at the University of Queensland, Australia.

DAVID PEEL, PhD, is a research fellow in the Department of Mathematics at the University of Queensland, Australia.

Reviews

"This is an excellent book.... I enjoyed reading this book. I recommend it highly to both mathematical and applied statisticians." (Technometrics, February 2002)

"This book will become popular to many researchers...the material covered is so wide that it will make this book a standard reference for the forthcoming years." (Zentralblatt MATH, Vol. 963, 2001/13)

"This book is excellent reading...should also serve as an excellent handbook on mixture modelling..." (Mathematical Reviews, 2002b)

"...contains valuable information about mixtures for researchers..." (Journal of Mathematical Psychology, 2002)

"...a masterly overview of the area...It is difficult to ask for more and there is no doubt that McLachlan and Peel's book will be the standard reference on mixture models for many years to come." (Statistical Methods in Medical Research, Vol. 11, 2002)

"...they are to be congratulated on the extent of their achievement..." (The Statistician, Vol.51, No.3)

User Reviews

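Coming to this book from a computer science background, I was mainly interested in computational efficiency and model scalability. Its discussion of algorithmic complexity and scalability is not as deep as that of literature dedicated to large-scale computation, but it lays a solid theoretical foundation for building efficient algorithms. For example, in discussing the computational bottlenecks of Gaussian mixture models (GMMs), the book's references to matrix decompositions and iterative optimization helped me understand why one might turn to expectation conditional maximization (ECM) or use gradient descent directly to approximate the optimum. I was pleasantly surprised that the authors even explore how mixture models can be used for data labeling in semi-supervised settings, which moves beyond traditional statistics into the frontier of machine learning. The discussion of model structure identification also made me realize that, when data are plentiful but labels are sparse, designing regularization terms that constrain model complexity and avoid generating too many unnecessary "clusters" is a key challenge in real deployments. The book's perspective is multidimensional: it is concerned not only with "what the model is" but also with "how to make the model work better under limited computational resources."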

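The book's layout and choice of examples suit early-career statistics researchers who want to move from theory to application but are uncomfortable with "black-box" algorithms. Rather than piling up overly abstract mathematical notation as some textbooks do, it embeds the theoretical derivations in understandable statistical settings. For example, when explaining the expectation-maximization (EM) algorithm, the authors do not go straight to laborious matrix derivatives; instead they guide the reader to the essence of its iterative convergence with a clear, intuitive two-step explanation: "first assume we know the latent variables, then update the parameters based on that assumption." In addition, the book's many R or Python code snippets with accompanying figures (I prefer Python for verification) let readers apply what they have learned to real datasets right away and experience the model-fitting process rather than stopping at understanding on paper. Especially praiseworthy is the section on handling missing data, which presents mixture models as a powerful imputation tool; this is highly practical for the incomplete reporting that is so common in real data. This combination of theoretical rigor and practical convenience is one of the book's most appealing qualities.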
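The two-step intuition quoted in the review above can be illustrated with a small sketch. The code below is not taken from the book; it is a minimal example, assuming a two-component univariate normal mixture, a crude initialisation at the data extremes, and a fixed number of iterations. The function names `normal_pdf` and `em_two_gaussians` are hypothetical and chosen only for this illustration.

```python
import math
import random


def normal_pdf(x, mean, var):
    """Density of a univariate normal distribution."""
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def em_two_gaussians(data, n_iter=200):
    """Fit a two-component univariate normal mixture by EM (illustrative sketch)."""
    n = len(data)
    # Crude initialisation (an assumption): equal weights, means at the extremes,
    # and both variances set to the overall sample variance.
    weights = [0.5, 0.5]
    means = [min(data), max(data)]
    overall_mean = sum(data) / n
    overall_var = sum((x - overall_mean) ** 2 for x in data) / n
    variances = [overall_var, overall_var]

    for _ in range(n_iter):
        # E-step: posterior probability that each point belongs to each component,
        # given the current parameter values.
        resp = []
        for x in data:
            joint = [weights[k] * normal_pdf(x, means[k], variances[k]) for k in range(2)]
            total = sum(joint)
            resp.append([j / total for j in joint])

        # M-step: update mixing proportions, means and variances using the
        # posterior probabilities as weights.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / n
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            var_k = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data)) / nk
            variances[k] = max(var_k, 1e-6)  # floor guards against a collapsing component

    return weights, means, variances


# Simulated data: two well-separated groups (values chosen only for illustration).
random.seed(0)
sample = [random.gauss(-2.0, 1.0) for _ in range(300)]
sample += [random.gauss(3.0, 1.0) for _ in range(300)]
weights, means, variances = em_two_gaussians(sample)
print(weights, means, variances)
```

A fixed iteration count keeps the sketch short; a practical implementation would more likely monitor the log-likelihood and stop once its increase falls below a tolerance, and would try several starting values because EM can converge to a local maximum.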

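The book's narrative style is steady and authoritative, as if it were guiding a scholar committed to statistical modeling on a journey from foundations to the research frontier. It does not cater to readers who only want to get started quickly; it expects at least a basic respect for and understanding of the underlying logic of statistical inference. I particularly appreciate the caution the authors show in handling the philosophical dilemmas of model selection. Faced with the temptation to overfit, the book's comparison of information-theoretic methods and cross-validation clearly lays out the bias-variance trade-offs behind each approach. This lets readers make better-grounded judgments in real projects instead of blindly relying on a default criterion. The book also gives a brief but important introduction to extending mixture models to mixed-effects models, which gave me new ideas for understanding complex longitudinal data analysis. In short, this is a book that needs to be read repeatedly, and its value grows with each reading; it teaches not just the models themselves but a rigorous, layered way of thinking about modeling complexity.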

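From my perspective as a deep learning researcher, this monograph on finite mixture models is a substantial work that rewards careful reading. It does not stop at listing basic concepts but digs into the mathematical logic and statistical inference framework behind both classical and modern mixture models. I was especially impressed by the care and insight the authors show in dealing with high-dimensional data and model selection. For example, the book's treatment of Bayesian nonparametric mixture models, in particular Dirichlet process mixture (DPM) models, not only builds the probabilistic graphical model clearly but also compares in detail the practical differences and computational complexity of truncation-based approximations (such as the Polya urn scheme) and Gibbs sampling. For engineers working with large, heterogeneous datasets, understanding these differences is essential, because they directly determine the model's convergence speed and final interpretability. The book also discusses at length the use of Markov chain Monte Carlo (MCMC) methods for estimating mixture model parameters, from Metropolis-Hastings to Hamiltonian Monte Carlo (HMC), covering the appropriate use cases and convergence diagnostics for each algorithm, so that readers can choose the most suitable inference engine for their needs. I especially appreciate the "Practical Reflections" sections at the end of the chapters, which often prompt us to think about the limitations of theoretical models when real-world data are noisy and model assumptions do not fully hold.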

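As an econometrics student focused on time series analysis, I found that this book offers a refreshing perspective on data that are not independent and identically distributed. Traditional ARIMA or GARCH models often assume that the mean or variance structure of a time series is fixed, but in real financial markets or macroeconomic variables the underlying "regime" or state tends to shift slowly, and this book provides exactly the theoretical tools for such problems. Its extended discussion of hidden Markov models (HMMs), especially how to incorporate them into a more complex mixture linear model framework, gave me a new mathematical language for analyzing volatility clustering. The authors are not content with showing how to fit a model; they devote considerable space to the estimation accuracy of the state transition matrix and to how information criteria (such as AIC, BIC, and even the more complex WAIC) can be used to determine the optimal number of states and avoid overfitting market noise. Further, the book's treatment of the asymptotic properties and consistency proofs for mixture models, although somewhat dense, gives those of us who need to write rigorous academic papers a solid theoretical footing. The book's depth makes it more than a tool book; it is more like a philosophical guide to "how to partition complex phenomena scientifically."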
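For reference, the two most common criteria named in the review above have the following standard forms. The parameter count shown is an illustrative assumption for a $g$-component univariate normal mixture with unrestricted variances, not a formula quoted from the book.

$$
\mathrm{AIC} = -2 \log L(\hat{\Psi}) + 2d, \qquad
\mathrm{BIC} = -2 \log L(\hat{\Psi}) + d \log n,
$$

$$
d = \underbrace{(g - 1)}_{\text{mixing proportions}} + \underbrace{g}_{\text{means}} + \underbrace{g}_{\text{variances}} = 3g - 1 .
$$

Here $L(\hat{\Psi})$ is the likelihood at the fitted parameters, $n$ is the sample size, and the model with the smallest criterion value is preferred. For $g = 2$ this gives $d = 5$. Because the usual regularity conditions fail when testing the number of mixture components, these criteria are best read as practical guides rather than exact tests.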

Sigh; tomorrow! It's tomorrow!
